Designing, developing, and deploying artificial intelligence systems: Lessons from and for the public sector - part three

Kevin Desouza and Gregory Dawson, both previous Center authors, and I recently wrote a paper on artificial intelligence and the public sector that was published in Business Horizons, a journal of the Kelley School of Business, Indiana University. The paper is appearing on our blog as a three-part series covering background information on AI and cognitive computing; designing, developing, and deploying cognitive computing systems; and harnessing new technologies. This post is the third in the series.

Developing cognitive computing systems

Best practices for developing a cognitive computing system (CCS) are the same as those for developing non-cognitive systems: organizations must uncover requirements, follow a robust methodology, and involve skilled developers, project managers, and users to ensure successful development. In this section, we address some of the key differences between developing a CCS and developing other systems.

Because CCSs learn from data, development must focus on understanding that data and its potential biases (Wiggers, 2018). Wiggers (2018) identified notable examples of these biases:

- An AI system was used to predict whether a defendant would commit a future crime. The algorithm was found to overstate the risk for black defendants versus white defendants.

- A study by Boston University found that datasets used to teach AI systems contained “sexist semantic connections” and, for example, considered “programmer” to be more masculine than feminine.

- A study by MIT found that facial recognition software was 12% more likely to misidentify black males than white males.

The problem of bias is real and needs to be addressed in the development stage of the CCS.

New York City confronted this challenge with efforts to make algorithms more accountable (Powles, 2017). A 2018 law created a task force to examine the city’s CCSs, which guide the allocation and deployment of resources ranging from police officers and firefighters to public housing and food stamps, to make sure that the data and supporting algorithms are fair and transparent. As James Vacca, a New York City Council member, said: “If we’re going to be governed by machines and algorithms and data, well, they better be transparent” (Powles, 2017). An early provision of this legislation, since dropped, would have required a city agency to turn over the source code of any new CCS to the public and to simulate the algorithm’s performance using data provided by New Yorkers.

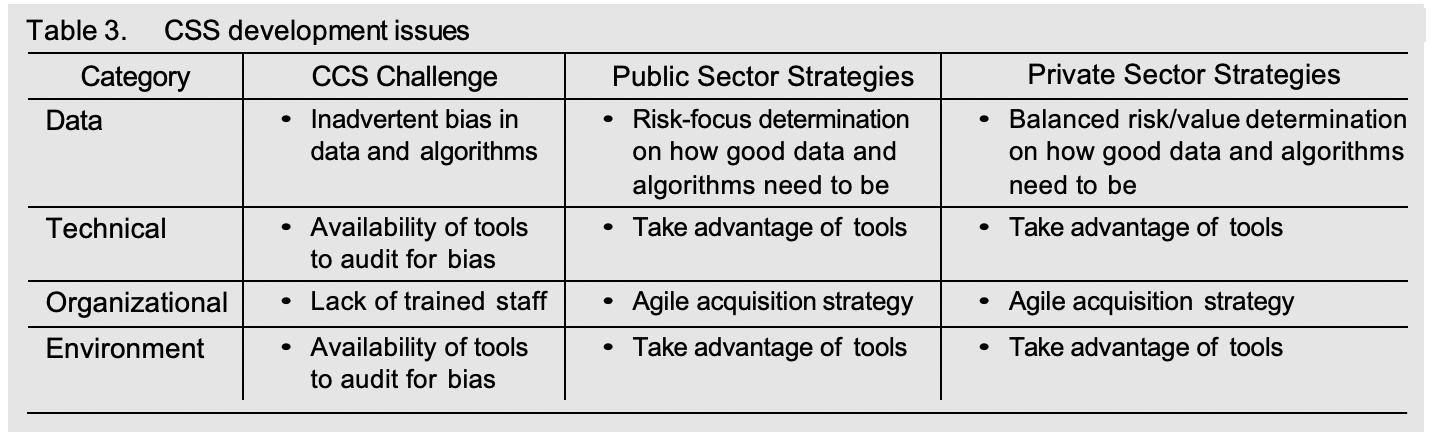

Because these systems will support data-driven decisions, it is important to understand the data and its biases; both the data and the algorithms need to be checked and validated before deployment. Judging the quality of an algorithm requires considering the risk dimension we display in Table 1. In the public sector, the risk dimension is far more salient: as the risk of making a bad decision rises from low to high, the data and algorithms require more evaluation. In the private sector, decision making is driven by a more balanced assessment of risk versus value.

The technical dimension of deploying CCSs has improved over time thanks to the development of open-source tools for auditing algorithms. In 2018, a New York-based AI startup announced that it was open-sourcing its audit tool, which is used “to determine whether a specific statistic or trait fed into an algorithm is being favored or disadvantaged at a statistically significant, systematic rate, leading to adverse impact on people underrepresented in the dataset” (Johnson, 2018). However, the tool does not remove the bias; it merely points the bias out to the developer. The release of such open-source tools comes as the industry continues to build other tools that can also detect biases (Wiggers, 2018).
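To make the idea of a "statistically significant, systematic" disadvantage more concrete, here is a minimal Python sketch of what such an audit check might compute. It is not the startup's tool. It assumes binary outcomes (favorable or not) for a reference group and a protected group, and it combines two widely used checks: the four-fifths rule from U.S. employee-selection guidelines (an impact ratio below 0.8) and a two-proportion z-test. The names `GroupOutcome` and `adverse_impact` and the 0.05 significance level are illustrative assumptions. Like the tool described above, the function only flags a possible bias; it does not remove it.

```python
import math
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Hypothetical record: favorable outcomes out of total cases for one group."""
    favorable: int
    total: int

    @property
    def rate(self) -> float:
        return self.favorable / self.total


def adverse_impact(reference: GroupOutcome, protected: GroupOutcome,
                   alpha: float = 0.05) -> dict:
    """Flag (but do not correct) a possible adverse impact on `protected`."""
    if reference.total <= 0 or protected.total <= 0 or reference.favorable == 0:
        raise ValueError("each group needs cases, and the reference group a favorable one")
    # Four-fifths rule: ratio of favorable-outcome rates, protected vs. reference.
    ratio = protected.rate / reference.rate
    # Two-proportion z-test using the pooled rate under the null hypothesis
    # that both groups share the same underlying rate.
    pooled = (reference.favorable + protected.favorable) / (reference.total + protected.total)
    se = math.sqrt(pooled * (1 - pooled) * (1 / reference.total + 1 / protected.total))
    if se == 0:
        # Every case in both groups was favorable, so there is no gap to test.
        z, p_value = 0.0, 1.0
    else:
        z = (protected.rate - reference.rate) / se
        # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for standard normal Z.
        p_value = math.erfc(abs(z) / math.sqrt(2))
    return {
        "impact_ratio": ratio,
        "z": z,
        "p_value": p_value,
        # Flag only when the gap is both large (ratio < 0.8) and significant.
        "flagged": ratio < 0.8 and p_value < alpha,
    }


if __name__ == "__main__":
    # Hypothetical counts, not data from any real system.
    print(adverse_impact(GroupOutcome(600, 1000), GroupOutcome(180, 400)))
```

With these hypothetical counts (600 of 1,000 favorable for the reference group, 180 of 400 for the protected group), the impact ratio is 0.75 and the gap is highly significant, so the case is flagged. Requiring both conditions is a design choice: a ratio test alone can fire on small, noisy samples, while a significance test alone can flag trivially small gaps in very large datasets.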

Organizational issues in CCSs are probably the most challenging, since an organization likely will not have people with the requisite skills in-house. In the public sector, this has been partially addressed through agile acquisition practices. Agile acquisition is a procurement strategy that “integrates planning, design, development, and testing into an iterative lifecycle to deliver small, frequent, incremental capability to the end user ... [it] requires integrated government and contractor processes and partnerships” (MITRE, 2019). The government proposal process also differs, because it can require bidders to complete a sprint rather than submit a written or oral proposal (White, Sateri, & Chawanasunthornpot, 2018). Although no known government entity has yet adopted it for CCS development, agile acquisition can dramatically lower development risk. Rather than acquiring an entire system through a single, lengthy, and risky procurement, the government can buy individual pieces of the system. Given how new CCS development is in government, this risk reduction is especially valuable.

Private sector firms can also adopt agile acquisition to develop their CCSs, since they share the public sector’s risk-reduction goals, and using this strategy should build the skills available to the organization. Environmental issues closely track technical issues during the development phase. The right tools can aid CCS development, and many of them can tackle biases in the data. Given the expansion of open-source tools, adoption of such approaches should increase significantly. Table 3 summarizes our CCS development findings.

While a great deal of work goes into CCS deployment, a significant amount of work must also happen after deployment. Two aspects are particularly salient. First, these systems must be audited. While all systems need monitoring, continuously learning CCSs can learn from the wrong data. Algorithmic biases can easily creep into a CCS, and the organization needs to understand this risk and test for it preemptively. In 2016, the Obama Administration directly called on companies to audit their algorithms. DJ Patil, former chief data scientist of the U.S., said at the time: “Right now the ability of algorithm to be evaluated is an open question. We don’t know technically how to take the tech box and verify it” (Hempel, 2018). Unfortunately, there are few standards for auditing an algorithm, and even if such criteria existed, a company might have to disclose trade secrets to the auditor. Singapore and Korea have recently announced the formation of AI ethics boards, as have various industry groups.
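Because a continuously learning CCS can drift after deployment, an audit may be better treated as a recurring check than a one-time certification. The Python sketch below assumes each batch of live decisions is logged as hypothetical (group, favorable) pairs; it recomputes an impact ratio for every batch and raises an alert whenever the ratio falls below a threshold. The record format, the function names `impact_ratio` and `monitor`, and the 0.8 threshold (borrowed from the four-fifths rule) are illustrative assumptions, not an established auditing standard.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical decision record: (group label, whether the outcome was favorable).
Decision = Tuple[str, bool]


def impact_ratio(batch: Iterable[Decision], reference: str, protected: str) -> float:
    """Favorable-outcome rate of `protected` divided by that of `reference`."""
    favorable, total = Counter(), Counter()
    for group, ok in batch:
        total[group] += 1
        favorable[group] += ok  # a bool counts as 0 or 1
    if total[reference] == 0 or total[protected] == 0 or favorable[reference] == 0:
        raise ValueError("batch lacks enough data for both groups")
    ref_rate = favorable[reference] / total[reference]
    prot_rate = favorable[protected] / total[protected]
    return prot_rate / ref_rate


def monitor(batches: Iterable[List[Decision]], reference: str, protected: str,
            threshold: float = 0.8) -> List[Tuple[int, float]]:
    """Re-audit each new batch of live decisions; return (batch index, ratio) alerts."""
    alerts = []
    for i, batch in enumerate(batches):
        ratio = impact_ratio(batch, reference, protected)
        if ratio < threshold:
            alerts.append((i, ratio))
    return alerts
```

For example, a batch in which both groups receive favorable outcomes 50% of the time yields a ratio of 1.0 and no alert, while a later batch with rates of 60% and 30% yields 0.5 and is flagged. In practice one would also want minimum batch sizes and a significance test before acting on an alert.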

An audit could have value by revealing that a company does not discriminate against different classes of individuals. A company that passes an audit could market that success as a sort of “Good Housekeeping seal of approval, signaling to customers a higher standard of ethics and building trust” (Hempel, 2018). In New York, a rich market is emerging for examining and certifying algorithms. In the New York City rental market, one company has developed a program to grade the maintenance of every rental building in the U.S. Using information from 311 phone calls to the city, which lodge violation complaints against buildings, the program calculates the grades, and for an additional fee of $100 to $1,000 per year it will certify a building. An external examiner evaluated the program’s logic and found that the algorithm was fair (Hempel, 2018).

Second, the value of the CCS needs to be determined, which taps the external dimension. The public sector must consider value more holistically than efficiency gains alone. The concept of public value (Osborne, Radnor, & Nasi, 2013) has a rich history in the public management literature. Common elements captured in various public values frameworks include equity, fairness, promotion of the common good and public interest, protecting the vulnerable and human dignity, economic sustainability of the program/initiative, and upholding the rule of law (e.g., Benington & Moore, 2011; Chohan & Jacobs, 2018; Jørgensen & Bozeman, 2007).

Organizations should look to public sector efforts for more holistic approaches to capturing value from CCSs. We recommend thinking of value capture along four dimensions, and the output of this thinking can be reflected in the value/risk typology we presented in Table 1. The four types of value that can be derived are:

- Process gains: costs or resources saved, time cut from processes, etc.;

- Output gains: being more effective;

- Outcome gains: transforming the customer experience and acting ethically; and

- Network gains: shaping interactions with other stakeholders due to the CCSs, adding to brand and leadership position, capturing new value from an existing supply network, and adding value through new affordances and influences to the overall ecosystem through disruption and transformation.

The first two ensure that the organization ‘advances the business of today’ through CCSs, while the last two ensure that it focuses on ‘advancing the business of tomorrow’ and remains relevant in the age of CCSs. We summarize the issues in Table 4.

Harnessing new technologies

CCSs will dramatically change the nature of computing, and we are already seeing the benefits of their implementation across government and private industry. We are entering what futurists already call the Fourth Industrial Revolution (Schwab, 2017). In this fourth revolution, the convergence of technologies and computing power is as transformative as in previous revolutions, but with much greater reach.

On the positive side, this means the world may become more connected than ever before, dramatically improving the efficiency of organizations and potentially even repairing the damage of previous revolutions. However, as Klaus Schwab points out, this requires organizations to adapt in novel and challenging ways and governments to manage new technologies and address security concerns (Schwab, 2017). Schwab calls for leaders and citizens to work together to harness these new technologies. We concur with this call and recommend, as a necessary first step, acknowledging that technologies like CCSs introduce challenges that require new management approaches, like those outlined in this and other articles in this special issue.